Active sensing control for robotic systems

The aim of this research is to determine the robot’s actions that minimize the estimation uncertainty caused by the noise affecting the robot’s sensors and actuators, in order to optimize the robot’s localization [1]. In other words, the goal is to generate, online, a trajectory that minimizes the maximum state estimation uncertainty of the employed observer (an Extended Kalman Filter, EKF, in [1,2]).
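As a rough illustration of the min-max criterion, the sketch below scores a few candidate trajectories by running only the covariance part of an EKF along each one. It keeps the candidate whose final covariance has the smallest largest eigenvalue, which is one way to read "maximum state estimation uncertainty". Everything here is an assumption for illustration, not the method of [1]: a planar point robot with single-integrator dynamics, range-only measurements to one known landmark, hand-picked noise levels, and a small finite set of candidates in place of the online optimization used in [1]. The helper names (`ekf_covariance_along`, `worst_case_uncertainty`, `pick_trajectory`) are hypothetical.

```python
import numpy as np


def ekf_covariance_along(path, landmark, P0, Q, R):
    """Propagate only the EKF covariance along a planned list of 2D positions.

    Hypothetical model: single-integrator robot (state Jacobian F = I) with
    additive process noise Q, and a scalar range measurement to one known
    landmark with noise variance R.
    """
    P = P0.copy()
    for x in path:
        # Prediction: with F = I the covariance only grows by Q.
        P = P + Q
        # Range measurement h(x) = ||x - l||, Jacobian H = (x - l)^T / ||x - l||.
        d = x - landmark
        H = (d / np.linalg.norm(d)).reshape(1, 2)
        S = H @ P @ H.T + R            # innovation covariance (1x1)
        K = P @ H.T / S                # Kalman gain (2x1)
        P = (np.eye(2) - K @ H) @ P    # covariance update
    return P


def worst_case_uncertainty(P):
    # Largest eigenvalue of P: variance along the worst-estimated direction.
    return np.linalg.eigvalsh(P)[-1]


def pick_trajectory(candidates, landmark, P0, Q, R):
    """Return the index of the candidate with the smallest worst-case uncertainty."""
    scores = [worst_case_uncertainty(ekf_covariance_along(c, landmark, P0, Q, R))
              for c in candidates]
    return int(np.argmin(scores)), scores


# Two hypothetical candidates around a landmark at the origin:
# a radial segment (always the same measurement direction) and a quarter arc.
steps = 50
radial = [np.array([5.0 + 0.1 * k, 0.0]) for k in range(1, steps + 1)]
arc = [5.0 * np.array([np.cos(a), np.sin(a)]) for a in np.linspace(0.0, np.pi / 2, steps)]

idx, scores = pick_trajectory([radial, arc], np.zeros(2),
                              P0=np.eye(2), Q=0.01 * np.eye(2), R=0.1)
print(idx, scores)
```

Along the radial segment the range measurement always constrains the same direction, so the tangential variance keeps growing; along the arc the measurement direction rotates, both directions get observed, and the arc (index 1) is selected.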

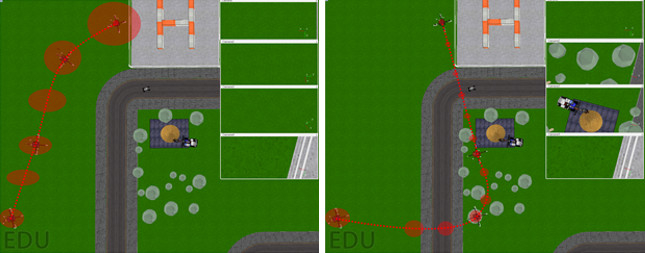

For example, Fig. 1 shows a drone following two different paths: one with very few features (left) and one with a rich set of features (right). The former results in poor localization, while the latter allows the drone to localize itself properly and perform its task. The goal is to find the most informative trajectory for the drone.
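To make the feature-poor versus feature-rich comparison concrete, the sketch below runs a linear Kalman filter for a point robot along the same corridor twice: once with a single feature near the start and once with a feature every metre. It is a toy stand-in for the drone of Fig. 1, under loudly stated assumptions: known landmark positions, a hypothetical sensor that returns a noisy relative position for every landmark within a fixed `SENSING_RANGE`, random-walk motion, and made-up noise values. `localize_along` is a hypothetical helper, not code from [1].

```python
import numpy as np

SENSING_RANGE = 2.0  # hypothetical sensor range (m)


def localize_along(path, landmarks, Q, R):
    """Linear Kalman filter covariance for a point robot along `path`.

    Hypothetical sensor: each landmark within SENSING_RANGE yields a noisy
    relative-position measurement z = l - x + v, so H = -I w.r.t. the position x.
    """
    P = np.eye(2)          # assumed initial position covariance
    H = -np.eye(2)
    for x in path:
        P = P + Q          # random-walk prediction
        for lm in landmarks:
            if np.linalg.norm(lm - x) <= SENSING_RANGE:   # feature visible
                S = H @ P @ H.T + R
                K = P @ H.T @ np.linalg.inv(S)
                P = (np.eye(2) - K @ H) @ P
    return P


path = [np.array([0.2 * k, 0.0]) for k in range(1, 51)]   # 10 m corridor
few = [np.array([0.0, 1.0])]                                # one feature near the start
many = [np.array([float(i), 1.0]) for i in range(11)]      # a feature every metre

Q, R = 0.01 * np.eye(2), 0.05 * np.eye(2)
poor_worst = np.linalg.eigvalsh(localize_along(path, few, Q, R))[-1]
rich_worst = np.linalg.eigvalsh(localize_along(path, many, Q, R))[-1]
print(poor_worst, rich_worst)
```

With only one feature, the covariance grows unchecked once the robot leaves its sensing range; with many features, each step brings fresh measurements and the worst-case variance stays small.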

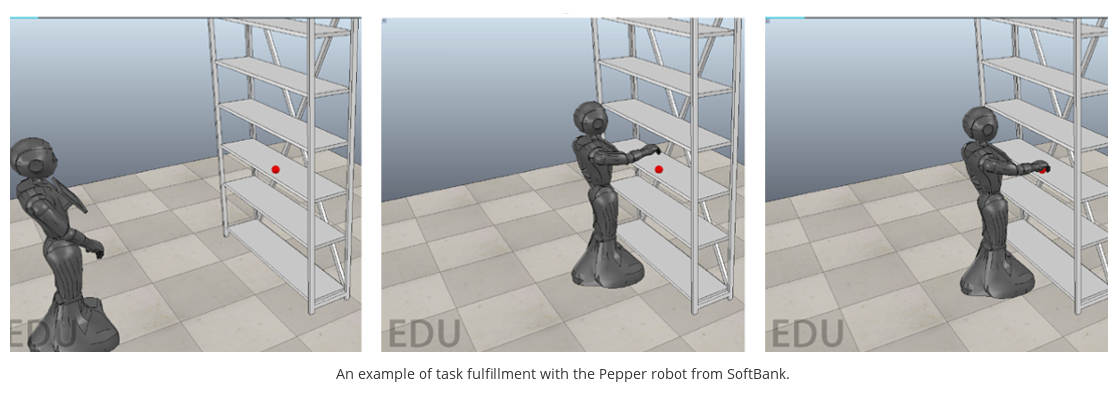

We are also interested in combining active perception with human input through shared control/autonomy frameworks [2]. See video1, video2 and video3 for some results.

Future research direction examples

- Online active sensing control in dynamic and unstructured environments: the aim of this project is to develop active sensing control strategies for a ground mobile robot that uses moving landmarks (UAVs).

- Online active sensing control for fast environment reconstruction: the aim of this project is to develop a methodology for fast, concurrent active exploration and environment reconstruction. The algorithm has to automatically select the landmarks that are most useful for localization.

- Online task-aware active sensing control: the aim of this project is to minimize the estimation uncertainty at the task level. To test our methodology, we will use an aerial vehicle carrying a small manipulator with a gripper at its end. The UAV will be asked to move from an initial hooked configuration to a final one, passing through a free-flight phase (monkey-like movements).

- Shared control active perception with automatic authority switching between humans and robots: the aim of this project is to develop an algorithm that automatically switches authority between the operator and the robot according to a metric related to the collected information (e.g., the covariance matrix of the employed observer).
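One simple shape such an authority switch could take is a hysteresis rule on a covariance-based metric, sketched below. This is purely an assumption for illustration: the metric (largest eigenvalue of the observer covariance), the two thresholds, and the policy of handing control to the robot when uncertainty is high are all hypothetical choices, and `AuthoritySwitch` is not taken from [2]. The gap between the `high` and `low` thresholds prevents rapid back-and-forth switching when the metric hovers near a single threshold.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class AuthoritySwitch:
    """Hypothetical hysteresis rule for shared control.

    The robot takes over when the worst-case estimation uncertainty
    (largest covariance eigenvalue) exceeds `high`, and hands authority
    back to the operator only once it has dropped below `low`.
    """
    low: float
    high: float
    robot_in_charge: bool = False

    def update(self, P: np.ndarray) -> str:
        worst = float(np.linalg.eigvalsh(P)[-1])
        if not self.robot_in_charge and worst > self.high:
            self.robot_in_charge = True     # uncertainty too high: robot acts to reduce it
        elif self.robot_in_charge and worst < self.low:
            self.robot_in_charge = False    # uncertainty low again: operator regains control
        return "robot" if self.robot_in_charge else "operator"


# Hypothetical sequence of isotropic covariances reported by the observer.
switch = AuthoritySwitch(low=0.2, high=0.5)
variances = [0.1, 0.3, 0.6, 0.4, 0.3, 0.15, 0.3]
modes = [switch.update(s * np.eye(2)) for s in variances]
print(modes)
```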

Environment

This project is part of an international collaboration between the CNRS/IRISA research center in Rennes, France (with Claudio Pacchierotti and Paolo Robuffo Giordano), the Centro di Ricerca “E. Piaggio” at the University of Pisa in Pisa, Italy (with Paolo Salaris), and Maynooth University in Maynooth, Ireland (with Marco Cognetti).

Contact

If you have any questions, please contact marco.cognetti@mu.ie

References

[1] P. Salaris, M. Cognetti, R. Spica, and P. Robuffo Giordano, "Online Optimal Perception-Aware Trajectory Generation," IEEE Trans. on Robotics, 35(6):1307-1322, Sept. 2019 (pdf) (video)

[2] M. Cognetti, M. Aggravi, C. Pacchierotti, P. Salaris, and P. Robuffo Giordano, "Perception-Aware Human-Assisted Navigation of Mobile Robots on Persistent Trajectories," IEEE Robotics and Automation Letters, 2020 (pdf) (video)

[3] M. Cognetti, P. Salaris, and P. Robuffo Giordano, "Optimal Active Sensing with Process and Measurement Noise," 2018 IEEE International Conference on Robotics and Automation (ICRA), 2018 (pdf) (videoPitch) (video)